How Violent Extremist Groups Have Hijacked Social Media

The Internet, and especially social media platforms, revolutionized the way people communicate, share knowledge and ideas, and distribute information. And while this global connectivity has often been a force for good, tech platforms have also enabled extremist groups to propagandize, radicalize, recruit new members, broadcast attacks and even fundraise on an unprecedented scale.

The sad reality is that extremists have been more creative, aggressive and insidious in their use of social platforms than governments and tech firms have been in tracking them and preventing them from hijacking the Internet.

While most social media platforms have officially banned terrorist and extremist groups from their services, research by the Counter Extremism Project, an ACCO member, shows that tech firms have broadly failed to acknowledge the severity of the problem, enforce their own rules or implement appropriate safeguards against the amplification of violent content.

This failure has enabled violent groups, including designated terrorist organizations like ISIS and homegrown extremist groups like the Proud Boys, to organize and grow by giving them, in effect, an online megaphone.

A telling example is the designated terror group Hezbollah, which has used Facebook to broadcast propaganda, recruit for attacks, report on the activities of its leadership and even solicit money. Facebook has enabled Hezbollah to reach a global audience cheaply and instantaneously, addressing local, national and international issues in real time. Some analysts even suggest that Hezbollah’s influence in the Levant and beyond depends heavily on the party’s sophisticated use of social media for grassroots advocacy.

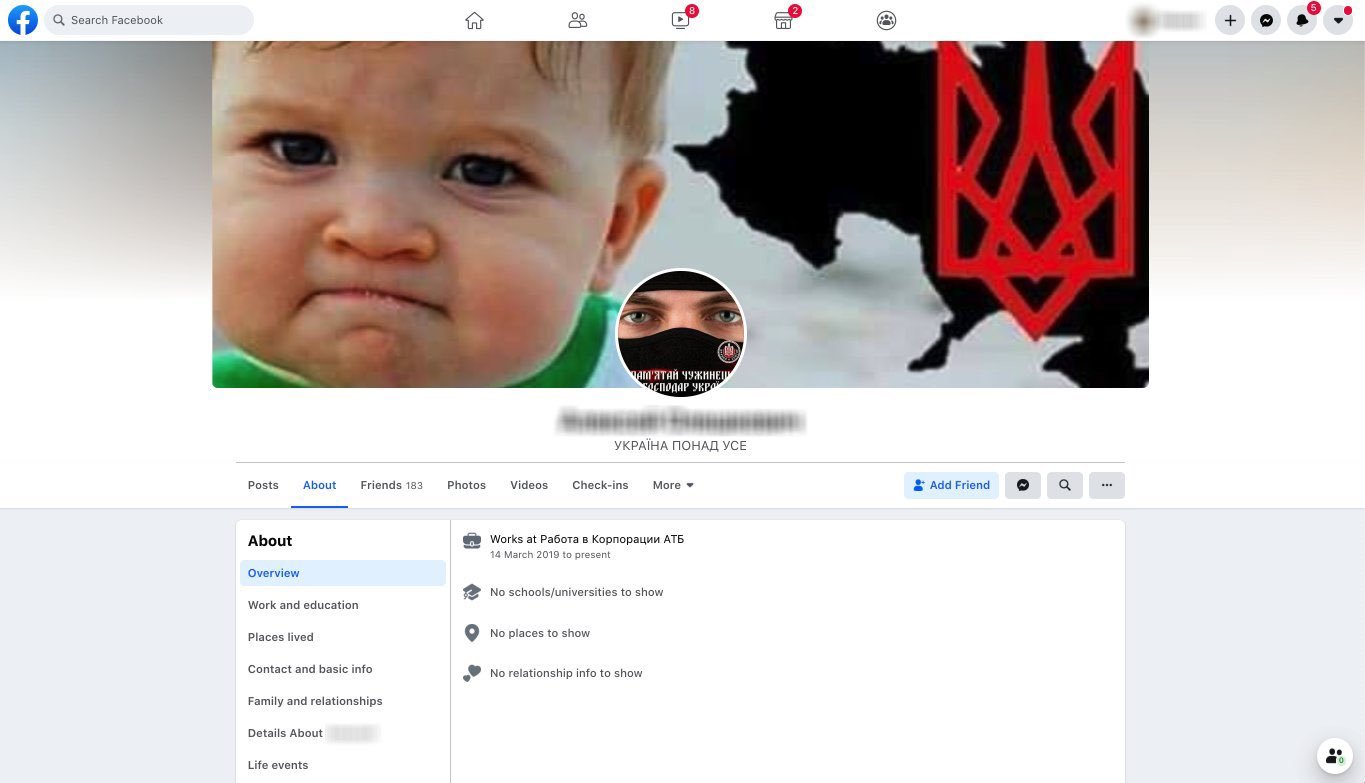

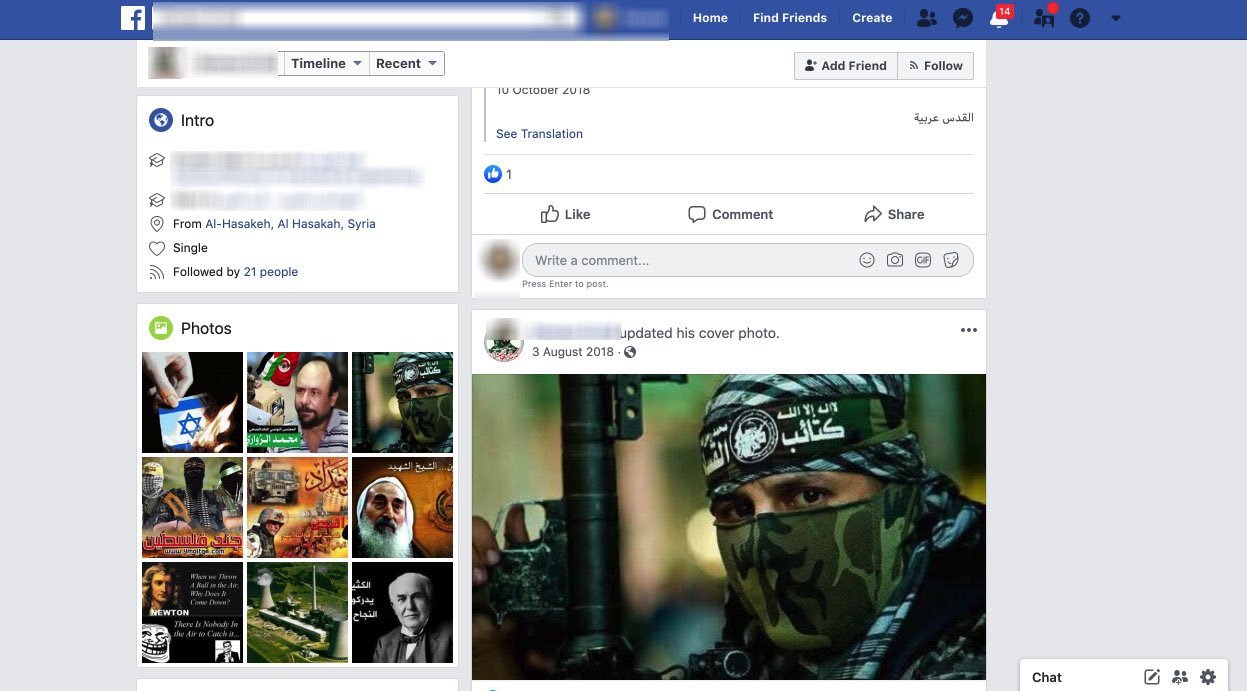

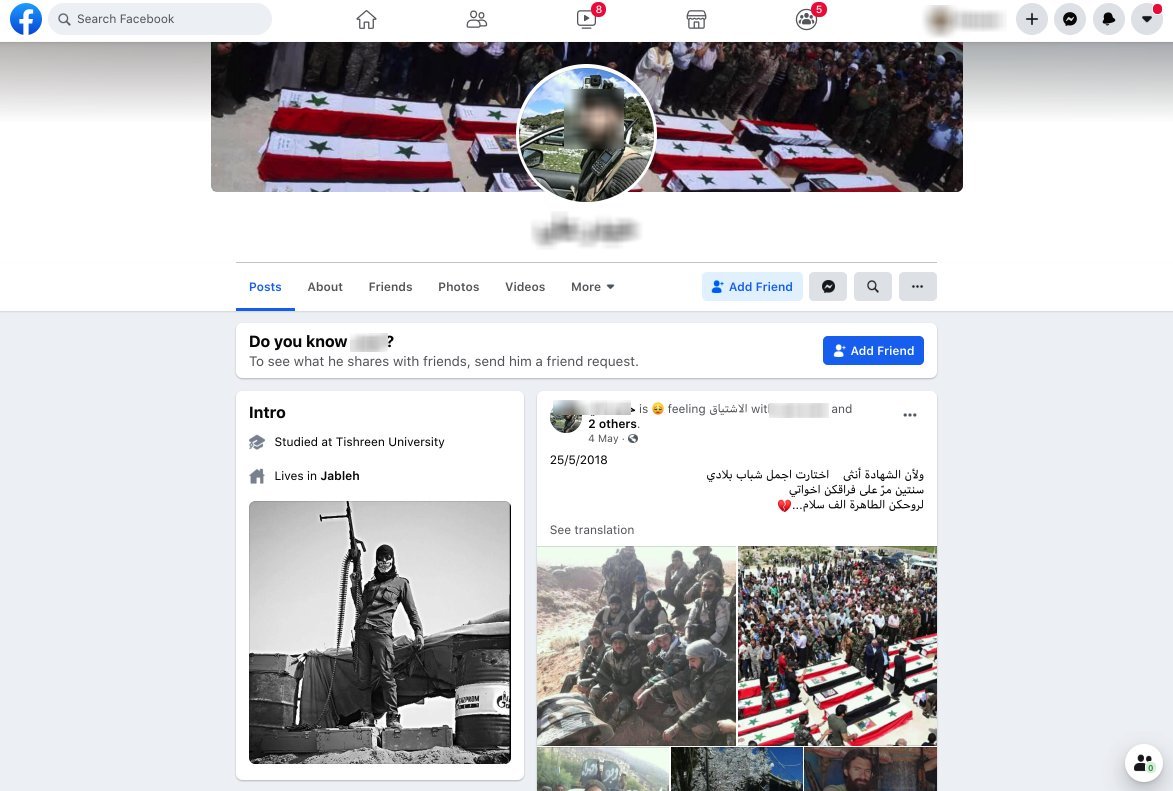

As with emerging violent groups here in the U.S., Hezbollah accounts are easy to locate, operating in plain sight rather than in private or secret Facebook Groups. Multiple designated Hezbollah entities link to their Facebook pages from their official websites, and these pages can also be found quickly by searching for the entity’s actual name.

A screenshot logged by an ACCO researcher in June 2019 from Hezbollah’s “Electronic Resistance” news site shows just how integral Facebook has become to Hezbollah’s public outreach: the site actually integrated Facebook’s logo into the Hezbollah logo, the iconic hand holding an assault rifle.

As with its recent efforts to ban the militia indicted over a plot to kidnap the Michigan governor, Facebook does occasionally remove pages linked to Hezbollah. But the pages tend to reappear weeks or months later and quickly build a solid following.

Moderation Failures: The Christchurch Example

The March 2019 Christchurch massacre is a striking example of the ineffectiveness of social media moderation systems: a lone gunman carried out two consecutive terrorist attacks at mosques in Christchurch, New Zealand, and livestreamed them on Facebook.

Facebook’s AI tools failed to catch the livestream because they had not been trained to recognize violent content filmed from the killer’s point of view. Facebook later admitted that no available technology allows for the foolproof detection of violence on streaming platforms. In fact, computers find it difficult even to tell real violence apart from violence in fictional films.

Facebook’s reliance on user reports also failed. Even though roughly 200 people watched the massacre live, no one reported the video until 12 minutes after the livestream ended.

Three days after the mass shooting, Facebook said it had removed 1.5 million copies of the video in the first 24 hours after the massacre, most of which were blocked as they were being uploaded. One year later, the firm was still struggling to remove copies of the attack from its platform.

Once violent content slips past Facebook’s flawed AI system, the firm often fails to remove it. The volume alone is daunting: ISIS, one of the most notable terrorist groups on social media, releases an average of 38 new items per day online, including 20-minute videos, full-length documentaries, photo essays, audio clips and pamphlets, in languages ranging from Russian to Bengali.

According to Spiders of the Caliphate, a report by ACCO member the Counter Extremism Project (CEP), Facebook failed to remove 57% of the 1,000 pro-ISIS accounts the researchers identified over a six-month period. Even more concerning than the removal rate was Facebook’s “Suggested Friends” tool, which recommended other ISIS supporters, propagandists and fighters. An internal Facebook study, reported by the Wall Street Journal, reached a similar conclusion, acknowledging that “64% of all extremist group joins are due to our recommendation tools.”

Facebook’s auto-generation features even create original terrorist content. A May 2019 whistleblower petition showed that Facebook had auto-generated a “Local Business” page for Al Qaeda, which garnered 7,410 Likes. Facebook also auto-generated “Celebrations” and “Memories” for friends of terrorists and extremists, sometimes featuring extremely violent content.

CDA 230

For years, Facebook has made misleading claims about its capacity to remove extremist and terrorist content, asserting that its AI systems identify 99% of the violent content it takes down before anyone sees it. CEP, however, has found that when terror content from groups like ISIS and Hezbollah is reported, it is flagged and removed only 38% of the time.

Under Section 230 of the Communications Decency Act, tech firms are not liable for hosting terror content, even if it results in real-world violence. The law, enacted in 1996, leaves it to technology platforms to moderate illegal activity at their own discretion while granting them immunity from liability for content their users post. Originally intended to allow the tech industry to grow, it now shields tech giants from any real responsibility for hosting extremist and terrorist content on their platforms.

It’s time to reform this law. Help us stop online extremism from growing by SIGNING OUR PETITION.